“Change your language and you change your thoughts.” – Karl Albrecht

Card sorting is a specialized type of user test that is useful for assessing how people group related concepts and what common terminology they use. In its simplest form, a card sort is the process of writing the name of each item you want to test on a card, giving the cards to a user, and asking him or her to group like items into piles. There are, however, a number of advanced options and different types of card sorting techniques.

Open Card Sort – An “open” card sort is when you do not provide users with an initial structure. Users are given a stack of index cards, each with the name of an item or piece of content written on it. They are then asked to sort through and group the cards, putting them into piles of like items on a table. Users are then asked to label each pile with the name that they think best represents that group. Open card sorts are usually conducted early in the design process because they tend to generate a large amount of wide-ranging information about naming and categorization.

Given the high burden on participants and researchers, I personally find an open card sort to be the least attractive method for most contexts. It is, however, the least biased approach. As a general rule, I would reserve this method for testing users with a high degree of expertise in the area being evaluated, or for large-scale exploratory studies when other methods have already been exhausted.

Closed Card Sort – The opposite of an open sort, a “closed” card sort is when the user is provided with an initial structure. Users are presented with a set of predefined categories (usually on a table) and given a stack of index cards of items. They are then asked to sort through the cards and place each item into the most appropriate category. A closed sort is best used later in the design process. Strictly speaking, participants in a closed sort are not expected to change, add, or remove categories. However, unless the context of your study prevents it, I would recommend allowing participants to suggest changes and having a mechanism for capturing this information.
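As a rough illustration of how closed sort results might be tallied, the Python sketch below counts, for each card, which category participants chose most often and what share of them agreed. The function name `placement_agreement`, the data layout (one dictionary of card to category per participant), and the sample cards are all assumptions made for this example, not part of any particular tool.

```python
from collections import Counter, defaultdict


def placement_agreement(sorts):
    """For each card, return (most common category, share of participants who chose it)."""
    votes = defaultdict(Counter)
    for sort in sorts:
        for card, category in sort.items():
            votes[card][category] += 1

    summary = {}
    for card, counter in votes.items():
        category, count = counter.most_common(1)[0]
        summary[card] = (category, count / sum(counter.values()))
    return summary


# Hypothetical results from three participants (illustrative data only).
sorts = [
    {"Board games": "Games", "Puzzles": "Games", "Bikes": "Outdoor"},
    {"Board games": "Games", "Puzzles": "Learning", "Bikes": "Outdoor"},
    {"Board games": "Games", "Puzzles": "Games", "Bikes": "Sports"},
]

agreement = placement_agreement(sorts)
for card, (category, share) in sorted(agreement.items()):
    print(f"{card}: {category} ({share:.0%} agreement)")
```

Cards with low agreement are the ones worth a closer look, since they may point to an ambiguous label or a missing category.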

Reverse Card Sort – Can also be called a “seeded” card sort. Users find information in an existing structure, such as a full site map laid out on index cards on a table. Users are then asked to review the structure and suggest changes. They are asked to move the cards around and re-name the items as they see fit. A reverse card sort has the highest potential for bias; however, it’s still a relatively effective means of validating (or invalidating) a taxonomic structure. The best structures to use are ones that were defined by an information architect, or someone with a high degree of subject matter expertise.

Modified Delphi Card Sort (Lyn Paul 2003) – Based on the Delphi Research Method[1], which in simple terms refers to a research method where you ask a respondent to modify information left by a previous respondent. The idea is that over multiple test cycles, information will evolve into a near consensus with only the most contentious items remaining. A Modified Delphi Card Sort is where an initial user is asked to complete a card sort (open, closed, or reverse), and each subsequent user is asked to modify the card sort of their predecessor. This process is repeated until there is minimal fluctuation, indicating a general consensus. One significant benefit of this approach is ease of analysis. The researcher is left with one final site structure and notes about any issue areas.
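The "minimal fluctuation" stopping rule can be made a little more concrete. The sketch below is one possible way to check it, assuming each participant's result is recorded as a dictionary of card to category; the names `cards_changed` and `has_stabilized`, and the thresholds `max_changes` and `window`, are hypothetical choices for illustration rather than part of the published method.

```python
def cards_changed(previous, current):
    """Count cards whose category differs between two layouts.

    A card that was added or removed also counts as a change.
    """
    all_cards = set(previous) | set(current)
    return sum(1 for card in all_cards if previous.get(card) != current.get(card))


def has_stabilized(layouts, max_changes=1, window=3):
    """True if each of the last `window` participants changed at most `max_changes` cards.

    `layouts` starts with the seed structure, followed by one layout per participant.
    """
    if len(layouts) < window + 1:
        return False
    recent = layouts[-(window + 1):]
    return all(cards_changed(a, b) <= max_changes for a, b in zip(recent, recent[1:]))


# Hypothetical study: a seed layout followed by four participants.
seed = {"Puzzles": "Games", "Bikes": "Outdoor", "Dolls": "Toys"}
p1 = {"Puzzles": "Learning", "Bikes": "Sports", "Dolls": "Toys"}
p2 = {"Puzzles": "Learning", "Bikes": "Outdoor", "Dolls": "Toys"}
p3 = dict(p2)
p4 = dict(p2)
layouts = [seed, p1, p2, p3, p4]

print(has_stabilized(layouts[:4]))  # first participant still changed two cards
print(has_stabilized(layouts))      # the last three participants changed very little
```

In practice the decision to stop is a judgment call by the researcher; a check like this only helps to make that judgment consistent from session to session.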

Online Card Sort – As the name implies, this refers to a card sort conducted online with a card sorting application. An online card sort allows for the possibility of gathering quantitative data from a large number of users. Most card sorting tools facilitate analysis by aggregating data and highlighting trends.
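One common way to aggregate results from many participants is a co-occurrence count: for every pair of cards, how many participants placed them in the same group. The sketch below shows the idea under the assumption that each participant's sort is stored as a list of groups; the function name `co_occurrence` and the sample data are made up for this example, and real tools may compute things differently.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same group."""
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sort the names so each pair is always stored in the same order.
            for a, b in combinations(sorted(group), 2):
                counts[(a, b)] += 1
    return counts


# Hypothetical sorts from three participants (illustrative data only).
sorts = [
    [["Bikes", "Scooters"], ["Puzzles", "Board games"]],
    [["Bikes", "Scooters", "Board games"], ["Puzzles"]],
    [["Bikes", "Scooters"], ["Puzzles", "Board games"]],
]

pairs = co_occurrence(sorts)
# Share of participants who grouped each pair together.
similarity = {pair: n / len(sorts) for pair, n in pairs.items()}

for pair, share in sorted(similarity.items(), key=lambda item: -item[1]):
    print(pair, f"{share:.0%}")
```

Pairs with a high share are strong candidates to live in the same category, while pairs that split participants highlight the contentious items.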

Paper Card Sort – A paper sort is done in person, usually on standard index cards or sticky notes. Unlike an online sort, there is opportunity to interact with participants and gain further insight into why they are categorizing things as they are.

Why Use Card Sorting?

- Card sorting is a relatively quick, low cost, and low tech method of getting input from users.

- Card sorting can be used to test the efficacy of a given taxonomic structure for a website or application. While commonly used for website navigation, the method can be applied to understand data structures for standalone applications as well.

- When designing new products or undertaking major redesign efforts.

- When creating a filtered or faceted search solution, or evaluating content tags.

- For troubleshooting, when other data sources indicate that users might be having a hard time finding content.

When is Card Sorting Most Useful?

Development Life-cycle

Card sorts are useful in the requirements gathering and design stages. Depending on where you are in the design process, you may get more or less value from a given method (open, closed, reverse, etc.).

Limitations of Card Sorting

- The results of card sorting can be difficult and time-consuming to analyze; results are rarely definitive and can reveal more questions than answers.

- The results of a card sort will not provide you with a final architecture; they will only give you insight into possible directions and problem areas.

How to Conduct a Card Sort

Card sorts are one of those things that are somewhat easier to conduct than to explain. Because there are so many variations, I’ve decided to illustrate the concept with a walkthrough of an actual project case study. I was recently brought into a card sorting study by a colleague of mine[2] who was working on a complex taxonomy evaluation. The project was for a top toy retailer’s e-commerce site. After weeks of evaluating traffic patterns and other data, my colleague had developed what he hoped would be an improved site structure. He wanted to use card sorting techniques to validate and refine what he had developed.

- Define your research plan. Our research plan called for some online closed card sorts to gather statistically relevant quantitative data, as well as the rather innovative idea of going to one of the retail locations and recruiting shoppers to do card sorting onsite. The in-store tests would follow a reverse sort, using the modified Delphi method. That is, shoppers would be shown the full site structure and asked to make changes. Each shopper would build on the modifications of the previous shopper until a reasonable consensus was achieved.

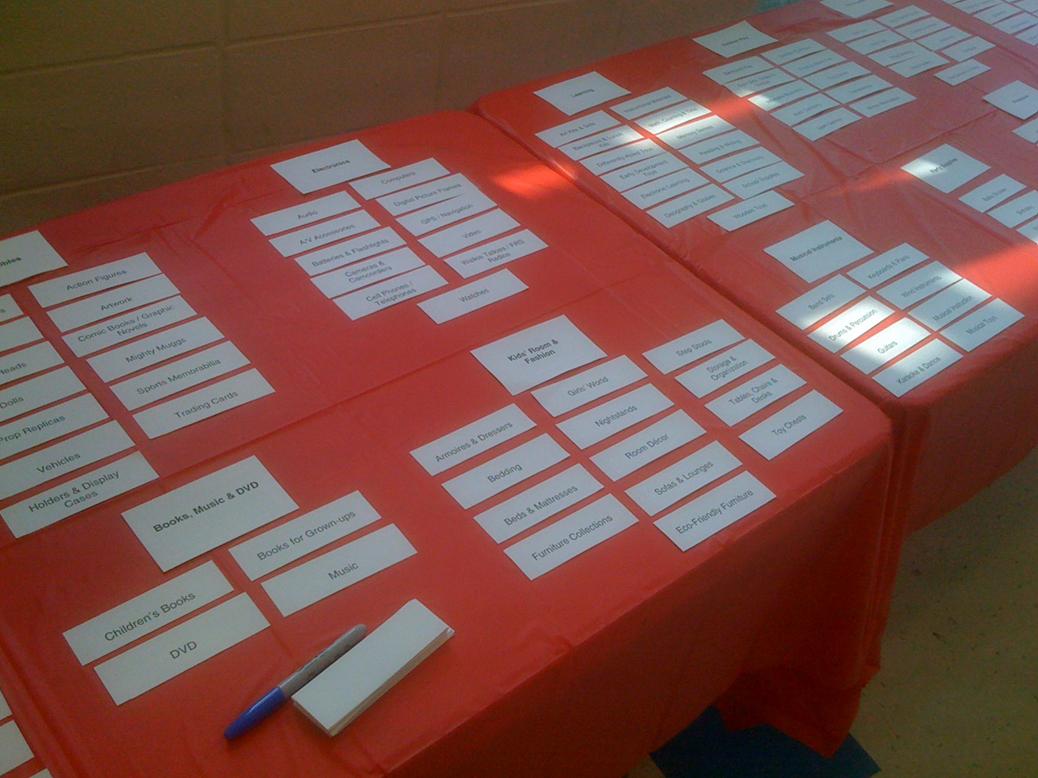

- Prepare your materials. For the in-store card sorts, we needed to take the newly defined top- and second-level navigation categories and put each on its own index card. The cards would be laid out on two long banquet tables so participants could see the structure in its entirety. Single-page reference sheets of the starting navigation were printed so we could take notes on each participant and track progressive changes. We had markers and blank index cards for modifications. A video camera would be used to record results, and a participant consent form was prepared.

- Recruit Participants. Unlike lab-based testing, where you have to recruit participants in advance, the goal for the in-store testing was to approach shoppers directly. The idea was that not only would they be a highly targeted user group, but that we would be approaching them in a natural environment that closely approximated their mindset when on the e-commerce site, i.e., shopping for toys. Because we would be asking shoppers to give up about 10-20 minutes of their time, the client provided us with gift cards, which we offered as an incentive and thank-you. Recruitment was straightforward; we would approach shoppers, describe what we were doing, and ask if they would like to participate. We attempted to screen for users who were familiar with the client’s website or at least had some online shopping experience.

- Conduct the Card Sort. Once a participant had agreed to take part and signed the consent form, we explained that the information on the table represented potential naming and structure for content on the e-commerce site. Participants were asked to look through the cards and call attention to anything they didn’t understand or would change. They could move items, rename them, or even take them off the table. Initially we let the participant walk up and down the table looking at the cards. Some would immediately start editing the structure, while others needed encouragement, which we gave (while trying not to bias results) by asking what they had come into the store for and where they might find that item. After an initial pass, we would point out some of the changes made by previous participants and describe any recurring patterns to elicit further opinions. After about 15 participants, the site structure stabilized and any grey areas were fairly clearly identified.

- Prepare the Analysis. At the end of the study, there was a reference sheet with notes on each participant’s session, video of the full study, and a relatively stable final layout. With this data, it was fairly easy to identify a number of recurring themes, i.e. issues that stood out as confusing, contentious, or as a notable deviation from the original structure. As in any card sort, the results were not directly translatable to a final information structure. Rather, they provided insights that could be combined with other data (such as online sorting results) to create the final taxonomy.

Figure 3: Sample Card Sort Display: Cut Index Cards on Table

Additional Resources

- OptimalSort (www.optimalsort.com) – an online card sorting utility.

- WebSort (www.websort.net) – another online card sorting utility.