Conducting a User Experience Audit

“If you would understand anything, observe its beginning and its development.” – Aristotle

A User Experience (UX) Audit is a secondary research method that pulls together any potentially relevant existing information on your software product and its market, and then reviews what you find in the context of your design goals. It’s a straightforward approach I’ve used in just about every strategy project I’ve completed for clients.

This article is intended to illustrate the type of data commonly available that can be helpful. It is by no means an exhaustive list, but should be enough to point you in the right direction. I recommend starting any strategic effort with this approach because it is vital to have a baseline understanding of the market landscape before learning more about the users within that market. In addition, much of the information and insights gleaned from this type of evaluation can be used to directly inform other user research methods, such as persona or survey development. I usually start the audit process as early as possible via Internet research and by requesting client artifacts even when an engagement does not specifically call for a formal “audit”.

Most organizations have existing structures by which they pull in various usage and marketing metrics. However, this data is not usually evaluated with a user-centric, user-behavior mindset. A UX Audit entails skimming through a large volume of data to unearth a relatively small set of relevant informational nuggets. Even so, audits are a worthwhile effort, and an audit’s scope can be defined in a manageable way.

Err on the side of collecting more information rather than less. If a client or stakeholder tells you the content you are requesting is not relevant, it is a good idea to be persistent and review the information for yourself. They might not be looking at it with a “UX” mindset.

A UX Audit can be used to answer the following questions:

- What are the current user trends and expectations for this industry/market?

- What have we already tried? Of that, what worked and what didn’t?

- What do our internal stakeholders think about our UX? What do they think is needed? Why?

- What customer issues, needs or problems are indicated in the data? Of those, which might be addressed (in whole or part) by the product’s UX?

- What ongoing metrics are being collected that UX can use in the future?

Why Conduct an Audit?

The most obvious benefit to conducting an audit is to avoid reinventing the wheel, i.e. conducting new primary research when the same information was already available. An equally productive reason is to help you formulate hypotheses about user behavior and/or issues with your product that you can then investigate further. A supplemental benefit is that the process can help you compile an accessible body of UX knowledge for your products that you can build upon over time.

When are Audits Most Useful?

- When undertaking the development of a new product.

- Before starting a substantive re-design.

- When starting a UX practice within an organization.

- As an exercise for new staff to ramp into product knowledge.

- If your company has accumulated a large amount of product research data that was conducted by different departments for different uses.

Development Life-cycle

In the context of the software development life-cycle, UX Audits are most useful when conducted during the high-level and detailed requirements gathering stages. Some audit materials (e.g. customer care data, web analytics, and sales data) can be re-evaluated post-production as follow-up research to track the effects of the product’s release.

Limitations of an Audit

- There is no guarantee that you will find data that addresses any specific questions. Sometimes the data isn’t there or it is too abstract to be useful in the context of UXD.

- It can be time consuming and somewhat overwhelming for the beginner to process the information, especially if audits are rarely conducted.

- Because the audit materials are almost entirely secondary research, you are limited to the methodologies, goals, and potential flaws of the existing research.

- A good audit involves a wide range of information sources. New companies and some industries might have difficulty pulling together sufficiently diverse sources during the first few audits. In some cases research might need to be purchased.

How to Conduct the Audit

The steps to conducting a UX Audit are straightforward. At a high level, you gather the audit materials together, create a spreadsheet for notes, review the materials, document findings, and then develop your insights or hypotheses for further research.

- Pull together your audit materials. The start of a UX audit is an excellent time to engage colleagues from other departments; you can solicit their help in gathering information and get different groups involved with tracking data over time.

- Stakeholder Interviews – Interviews are a great starting point for a UX Audit and can go a long way toward helping you gather the materials you need. You will want to speak (one on one) with internal stakeholders such as department heads, product managers and lead developers. You might already speak with these individuals, but interviewing them specifically about the market landscape and customer issues may not only provide some good insights; it can also go a long way toward gaining buy-in and support for your efforts. Be sure to ask each stakeholder for a list of their recommended materials, and follow up to get them.

- Sales Statistics – While primarily used by sales and finance, some of this data can be useful for a UX Audit, particularly if you are reviewing the effectiveness of a lead-generating or e-commerce web site. One thing to look for is information that indicates a problem with the messaging or help functions of the site. For example, if a site selling window curtains sees a higher ratio of “wrong size” returns among its online customers than among its in-store customers, this might indicate a potential issue with the clarity of size information on the site.

- Call Center Data – Call centers are a great way to gather information about what ticks people off. While much of the information may not be relevant, you can usually gain some key insights about what is missing, or even better, get ideas on what you can proactively improve. As an example, the online signup process for a broadband company I worked with had functionality that would tell users whether they were eligible for services. A UX Audit of call center data showed that a percentage of customers who were initially told they were eligible were actually ineligible after a closer review of their order details. While we were unable to resolve this programmatically in the short term, armed with this understanding, we were able to modify functionality and messaging to set expectations more appropriately for users.

- Web Analytics – Quantitative web analytics will give you insight into how many people are visiting your web site, where they are coming from, what they are looking at, and some trends over time. Advanced analytical tools can be implemented and mined to give ever-increasing detail about what people are doing once they get to your site, where they tend to drop off, and where they go once they leave. I’ve had at least one corporate client that was not mining its web logs. Luckily, the data was being collected, just not used within an analytics package. We were able to get them set up with an appropriate package that allowed the team to view historical and ongoing site usage.

- Adoption Metrics – Feature adoption/usage metrics are a good way to assess the efficacy of desktop and/or web-based operational support systems. These metrics can be system-tracked, but in some cases need to be manually investigated. While this is fairly easy for a SaaS or mobile provider, if you are a desktop application provider, you might only be able to get your customer adoption metrics through surveys or interviews if these monitoring touch-points have not been built into your system.

- Feedback and Survey Results – Many marketing groups put out feedback forms and/or have released campaign-specific user surveys. These are usually not UX focused, but can offer some insights into your users’ preferences, attitudes and behaviors. Take some time to scan the comment fields and categorize them if possible. You can turn this information into useful statistics with supplemental anecdotes, e.g. “20% of user comments referred to difficulty finding something”, supported by a comment such as “I can’t find baby buggies, do you still sell them?”

- Past Reviews & Studies – Any internal market research, usability studies, ethnographic studies, or expert reviews[1] conducted should be audited. Even if a study was conducted for a previous release or in a different context than your project, it may contain some key informational gems and so is worth scanning through. In addition to applying your own critical eye, it is wise to find out whether others in the organization valued the research and why.

- The Twitterverse and Blogosphere – While not relevant for all companies, review sites, blogs, Facebook, Twitter, and other social networking sites can offer a unique and unfiltered view of how customers perceive your software or website. Try searching your company or product’s name on Google and other sites to see what information is returned. Some of the social networking sites even offer functionality that helps you keep track. If people are talking, you may want to add this type of task to your research calendar at consistent intervals.

- Specifications – Take a look at product functional specifications, roadmaps, and business analyses. Anything generated relatively recently that can give some background insight into why certain features or functions have been developed might prove useful. Many of these documents have some relevant facts about users that were researched by the authors. At best it will save you some of your own research time; at worst you’ll have a better understanding of why certain decisions were made for what exists today.

- Market Research – While market research might give insight into user demographics, this type of research is usually not directly translatable into how you should design your product. However, it can help you develop hypotheses about what might work and provide a framework for user personas and user narratives. These hypotheses can be tested through other research methods. Market research can help you make a reasonable guess at things such as users’ technology skill level, initial expectations, or level of commitment to completing certain tasks.

- Create a Spreadsheet. Create a spreadsheet listing all of the materials you will be auditing. You can use this as a means of tracking what was reviewed, and by whom if more than one person is working on the audit. The spreadsheet can also be used as a central place to put your notes, facts, insights, ideas and questions generated by the review of each audit material.

- Review the Materials. Review materials for any relevant information, updating your spreadsheet as you progress. While it sounds daunting, the audit process can be a fairly cursory review. You don’t need to view every bit of detail; scanning can be sufficient. Remember, you are only trying to pull out the 10-15% of data that will be relevant to the goals of your project.

- Categorize Findings. After you’ve completed the review portion of the UX Audit, it’s time to clean up your spreadsheet notes, analyze the information, and categorize any findings. Try to distill what you’ve learned into high-level concepts that are supported by data points and anecdotes, followed by your hypotheses. An oversimplified example of categorized findings would be:

Category – Way Finding (Users’ ability to find things)

Data

– 20% of feedback comments referred to users not being able to find what they are looking for.

– A recent study indicates that if users can’t find an item within 3 minutes they leave the site.

Anecdote

“I can’t find baby buggies, do you still sell them?”

Hypotheses

We might have an issue with our site’s navigation or taxonomy. We might need a search function.

- Schedule a Read-out. Take time to present your findings; set up a read-out for your colleagues. After conducting the read-out, publish your documentation on the intranet, to a wiki, in a document management system or on a file share. Let people know where you’ve placed the information. Now is a good time to tentatively schedule the next audit on your research calendar.
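The comment categorization described under feedback and surveys can be roughed out in a few lines of Python. This is only a sketch under stated assumptions: the keyword lists, the category names, and the sample comments are all hypothetical (only the first comment echoes the anecdote used in this article), and simple keyword matching is a stand-in for the manual reading an audit usually involves.

```python
from collections import Counter

# Hypothetical keyword lists; tune these to your own product and feedback.
CATEGORY_KEYWORDS = {
    "way finding": ["can't find", "cannot find", "couldn't find", "where is"],
    "checkout": ["checkout", "payment", "cart"],
}


def normalize(text):
    """Lowercase the comment and replace curly apostrophes with straight ones."""
    return text.lower().replace("\u2019", "'")


def categorize(comment):
    """Return every category whose keywords appear in the comment."""
    text = normalize(comment)
    return [
        category
        for category, keywords in CATEGORY_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]


def summarize(comments):
    """Map each category to the percentage of comments that mention it."""
    total = len(comments)
    if total == 0:
        return {}
    counts = Counter(cat for comment in comments for cat in categorize(comment))
    return {cat: round(100 * n / total, 1) for cat, n in counts.items()}


# Made-up sample comments, for illustration only.
comments = [
    "I can\u2019t find baby buggies, do you still sell them?",
    "Love the new colors!",
    "The payment page timed out twice.",
    "Where is the size chart?",
    "Fast delivery, thanks.",
]

summary = summarize(comments)
for category, pct in sorted(summary.items()):
    print(f"{category}: {pct}% of comments")
```

A tally like this only points you at comments worth reading; the anecdotes and hypotheses still come from reviewing the matched comments yourself.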

Additional Resources

- Pew Internet Life (www.pewinternet.org) – Internet research, ongoing

- Omniture (www.omniture.com) – robust analytics package

- Web Trends (www.webtrends.com) – middle range analytics package

- Google Analytics (analytics.google.com) – analytics with useful functions

- Forrester (www.forrester.com) – Market research

- ComScore (www.comscore.com) – Market research

Questions About This Topic?

I’m happy to answer more in-depth questions about this topic or provide further insight into how this approach might work for you in your company. Post a comment or email me at dorothy [at] danforthmedia [dot] com

ABOUT DANFORTH MEDIA

Danforth is a design strategy firm offering software product planning, user research, and user centered design (UCD). We provide credible insights and creative solutions that allow our clients to deliver successful, customer-focused products. Danforth specializes in leveraging user experience design (UXD), design strategy, and design research methodologies to optimize complex multi-platform products for the people who use them.

We transform research into smart, enjoyable, and enduring design.

www.danforthmedia.com

[1] Common term used to describe a usability evaluation conducted by a UX specialist.

Tips for Establishing User Research in an Organization

Q. How was God able to create the world in seven days?

A. No legacy system.

Unlike “God” in this corny systems integrator joke, our realities constantly require us to deal with legacy systems, processes, and attitudes. This article discusses some critical success factors for getting back credible, valuable research data. It also provides some ideas on project management and obtaining stakeholder buy-in. Many of these tips come directly from real project experiences, so examples are provided where applicable.

Be Prepared for Setbacks

A good friend and mentor of mine once told me—after a particularly disappointing professional setback—that it’s quite possible to do everything the “right” way and for things to still not work out. What he was saying, in short, is that not everything is in our control. This understanding liberated me to start looking at all my efforts as a single attempt in an iterative process. As a result, when starting something new to me, I tend to try out a range of things to gauge a baseline for what works, what doesn’t, and what might have future potential. Anyone who has adopted this approach knows that as time passes, a degree of mastery is achieved and your failure percentage decreases. This doesn’t occur because you are better at “going by the book”. It happens because you get better at identifying and accounting for things outside your control.

That said, whether you are trying to introduce a UXD practice into your organization, or are just looking to implement some user research, the most realistic advice I can offer is the Japanese adage “nanakorobi yaoki”, or “fall down seven, get up eight.”

Account for Organizational Constraints

You don’t need a cannon to shoot down a canary. Be realistic; consider the appropriateness of your research methods in the context of your organization’s stage and maturity. It will not matter how well-designed or “best practice” your research is if the results cannot be adequately utilized. Knowing where you are in the lifecycle of a company, product, and brand will help set expectations about results. In this way, a product’s user experience will always be a balance between the needs of the user and the capacity of an organization to meet those needs. If you are developing in a small startup with limited resources, your initial research plans may be highly tactical and validation focused. You’ll probably want to include plans that leverage family and friends for testing, and rely heavily on existing third-party or purchased research. Alternatively, a larger organization with a mature product will need to incorporate more strategic, primary research, and will have use for more sophisticated methods of presenting and communicating research to a wide range of stakeholders.

Foster a Participatory Culture

Want buy-in? Never “silo” your user research.

Sometimes, particularly in large corporations, there can be a tendency for different departments to silo or isolate their knowledge. This can be for competitive “Fiefdom Syndrome”[1] reasons or, more often than not, simply because there is no process to distribute information effectively. Whatever the challenges, there are some very practical, self-serving reasons to actively communicate your UXD processes and research. First, because anyone in your organization who contributes to the software’s design is likely to have an impact on the user experience, it’s your job to ensure that those people “see what you see” and are empowered to use the data you find. Second, UXD is an art, and as with anything else that has a degree of subjectivity, you’ll need credibility and support if you want your insights and interpretations accepted. Lastly, UXD is a complex process with many components; you will get more done faster if you encourage active, company-wide participation.

Tips on fostering participation:

- Identify parts of your UX research that could be performed by other functional areas, e.g. surveys done by Customer Care, usability guidelines for Quality Assurance, or additional focus group questions for Marketing.

- Offer other areas substantive input into user testing, surveys, and other research, e.g. add graphic design mockups into a wireframe testing cycle and test these with users separately from the wireframes.

- Discuss process integration ideas with the engineering, quality assurance, product, editorial, marketing and other functions. Make sure everyone understands what the touch-points are.

- In addition to informing your own design efforts, present user research as a service to the broader organization; schedule time for readouts, publish your findings, and invite people to observe testing sessions.

Understand the Goals of your Research

Are you looking to explore user behaviors and investigate future-thinking concepts? Or, are you trying to limit exploration and validate a specific set of functionality? There is a time and place for both approaches, but before you set out on any research effort it is important that you determine the overarching goal of your research. There are some distinct differences in how you implement what I’ll call discovery research vs. validation research—each of which will produce different results.

- Discovery Research, which can be compared to theoretical research, focuses on the exploration of ideas and investigating users’ preferences and reactions to various concepts. Discovery research is helpful for new products, innovations, and some troubleshooting efforts. This type of research can complement market research, but unlike focus groups, UXD discovery research explores things such as unique interaction models, or user behaviors when interacting with functionality specific to search or social media.

- Validation Research, which can be compared to empirical research, focuses more on gauging users’ acceptance of a product already developed, or of a high- or low-fidelity prototype that is intended to guide development. While a necessary aspect of the UXD process, validation research tends to be more task-based and is less likely than discovery research to call attention to false assumptions or superseding flaws in a system’s design. An example of a false assumption that might not be revealed in validation research is the belief that an enhanced search tool is necessary. The tool itself may have tested very well, but the task-specific research method failed to reveal that the predominant user behavior is to access your site’s content through a Google search. Therefore, you might have been better off enhancing your SEO before investing in a more advanced search.

Crafting Your Research Strategy

Just as in any project effort, it is vital to first define and document your goals, objectives and approach. Not only does this process help you make key decisions about how you want to move forward, it will serve as your guidepost throughout your project, helping you communicate activities to others. After the research is conducted, it lends credibility to your research by explaining your approach. A well-crafted research strategy provides an appropriate breadth and depth for a more complete understanding of what we observe. Consider small, incremental research cycles using various tactics. An iterative, multi-faceted methodology allows for more cost-efficient project life-cycles. It also mitigates risk since you only invest in what works.

Figure 2: An example of a “Discovery” research strategy developed for a media company. The strategic plan consisted of audits, iterative prototypes, user testing, and various events.

Consider a Research Calendar

A research calendar can help you manage communication as well as adapt to internal and external changes. It can help track research progress over time, foster collaboration, reduce redundancy, and integrate both team and cross-departmental efforts. A good research calendar should be published, maintained, and utilized by multiple departments. It should include recurring intervals for items such as competitive reviews and audits, calibrated to the needs of your product. Your calendar doesn’t have to be fancy or complicated; you can use an existing intranet, a company-wide Outlook calendar or even a public event manager such as Google or Yahoo! calendar. Regardless of the tool, your research calendar can help prevent people from thinking about user research as a “one-off” effort. User research should be considered a living, evolving, integral part of your development process, and a maintained research calendar with a dedicated owner conveys this. If your company is small or you are just getting started with user research, consider collaborating with other departments to add focus groups, UAT events, and QA testing to the calendar as well. Not only will it foster better communication, it may also result in the cross-pollination of ideas.

Be Willing to Scrap Your “Best” Ideas

It’s easy to become enamored with an idea or concept, something that interests us, or “feels” right, despite the fact that the research might be pointing in a different direction. Sometimes, this comes from a genuine intuitive belief in the idea; other times it’s simply the result of having invested so much time and/or money into a concept that you’re dealing with a type of loss aversion bias[2]. Even after years of doing this type of research, I have to admit this is still a tough one for me, requiring vigilance. I have seen colleagues, whom I otherwise hold in high regard, hold on to ideas regardless of how clear it is that it’s time to move on. This tendency, to find what we want to find and to structure research to confirm our assumptions, is always a possibility in user research. And while it can be mitigated by process and methodology, it still takes a degree of discipline to step back and play devil’s advocate to your best, most fascinating ideas and look at them in the harsh light of the data being presented to you. Ask yourself: am I observing or advocating? Am I only looking at data that supports my assumptions, turning a blind eye to anything that contradicts them? It can be a painful process, but if the idea can hold up to objective scrutiny, you might actually be on to something good.

[1] Herbold, Robert. The Fiefdom Syndrome: The Turf Battles That Undermine Careers and Companies – And How to Overcome Them. Garden City, NY: Doubleday Business, 2004.

[2] “Loss Aversion Bias is the human tendency to prefer avoiding losses above acquiring gains. Loss aversion was first convincingly demonstrated by Amos Tversky and Daniel Kahneman.” -http://www.12manage.com/description_loss_aversion_bias.html

Conducting a Solid UX Competitive Analysis

“Competition brings out the best in products and the worst in people.” – David Sarnoff

Most people are familiar with the concept of a competitive analysis; it’s a fairly standard business term to describe identifying and evaluating your competition in the marketplace. In the case of UXD, a competitive analysis is used to evaluate how a given product’s competition stacks up against usability standards and overall user experience. A comparative analysis is a term I’ve often used to describe the review of applications or websites that are not in direct competition with a product, but may have similar processes or interface elements that are worth reviewing.

Often, when a competitive review is conducted, the applications or websites are reviewed against a set of fairly standard usability principles (or heuristics) such as layout consistency, grouping of common tasks, link affordance, etc. Sometimes, however, the criteria can be more broadly defined to include highlights of interesting interaction models, notable functionality and/or other items that might be useful in the context of the product being designed and/or goals of a specific release.

The Expert Review

Competitive reviews can be done in conjunction with an “expert” review, which is a usability evaluation of the existing product. If doing both a competitive and an expert review, it’s helpful to start out with the competitive review and then conduct the expert review using the same criteria. Completing the competitive review first allows you to judge your own product relative to your competition.

Why Conduct a Competitive Analysis?

- Understand how the major competition in your space is handling usability

- Understand where your product stands in reference to its competition

- Generate ideas on how to solve various usability issues

- Get an idea of what it might take to gain a competitive edge via usability/UX

When is a Competitive Analysis Most Useful?

- If a thorough competitive review has never been conducted.

- When a new product or a major, game-changing rebuild is being considered.

- Annually or bi-annually, to keep an eye on trends in your industry and on the web (such as changes in how social networking sites are integrated).

Competitive analysis is best done during early planning and requirements gathering stages. It can be conducted independently of a specific project cycle or, if used with more focused criteria, it can help with the goals for a specific release.

Limitations of a Competitive Analysis

- A competitive analysis can help you understand what it will take to come up to par with your competitors; however, it cannot show you how you can innovate and lead.

- Insights can be limited by the knowledge level and/or evaluation abilities of the reviewer.

- They can be time consuming to conduct and need to be re-done on a regular basis.

How to Conduct a UXD Competitive Analysis

- Select your competition. On average, I recommend targeting no fewer than five and no more than ten of your top competitors. The longer the competitive list is, the more difficult it will be to do a sufficiently thorough investigation. In addition, there comes a point of diminishing returns where there is not much new going on in the space that hasn’t already been brought to light by a previous competitor.

- Define the assessment criteria. It is important to define your criteria before you get started to be sure you are consistent in what you look for during your reviews. Take a moment to consider some of the issues and goals your organization is dealing with and some of the hypotheses you uncovered during your audit, and try to incorporate these into the criteria where possible. Criteria should be specific and assessable. Here is a short excerpt of the criteria used in an e-commerce site evaluation:

i. Customer reviews and ratings?

ii. Can you wish list your items to refer back to later?

iii. In-store pickup option?

iv. Product availability indication?

v. Can you compare other items against each other?

vi. What general shopping features are available?

- Create a Spreadsheet. Put your entire assessment criteria list in the first column, and the names of your competition along the top header row. Be sure to leave a general comments line at the bottom of your criteria list; you will use this for notes during individual reviews. Some of the evaluation might be relative (e.g. rate the quality of imagery relative to other sites on a scale of 1-10), so it is particularly helpful to have one spreadsheet as you work through each of your reviews.

- Gather your materials. Collect the competitive site URLs, software, or devices that you will be reviewing so they will be readily available. A browser with tabbed browsing works great for web reviews. A tip for a mobile device application is that often simulators can be downloaded that allow you to display the mobile device software on your computer.

- Start Reviewing. One at a time, go down the criteria list while looking through the application and enter your responses. It can be helpful to use a double screen with the application on one view and the spreadsheet on the other. Take your time and try to be as observant as possible; you are looking for both good and bad points to report. As you review, write down notes on what you liked, what annoyed you and any interesting widgets you see. Take screen captures of interesting or relevant screens as you do each review.

- Prepare the Analysis. Create an outline of the review document, including a summary area and a section for each individual review. Paste the assessment results and your notes from the spreadsheet into the document and use them as a starting point for writing the report. You may need to grab additional screen captures of specific things that will be in your evaluation.

- Summarize your Insights. Now that you have the reviews done, you can look back at what data pops out as most relevant. Some of the criteria results can be translated into summary charts and graphs to better illustrate the information.

- Schedule a Read Out. Take time to present your findings; set up a read-out for your colleagues. You may want to create a very high-level PowerPoint presentation of some of the more interesting points from your review. After conducting the read-out, publish your documentation and let people know where you’ve placed the information.
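The spreadsheet layout described in the steps above (criteria down the first column, competitors across the header row, and a general comments line at the bottom) can be generated rather than typed by hand. The following is a minimal sketch in Python: the criteria are taken from the e-commerce excerpt, while the competitor names and the function names are placeholders of my own, not part of any particular tool.

```python
import csv
import io

# Criteria from the e-commerce excerpt; extend with your own list.
criteria = [
    "Customer reviews and ratings?",
    "Can you wish list your items to refer back to later?",
    "In-store pickup option?",
    "Product availability indication?",
    "Can you compare other items against each other?",
    "What general shopping features are available?",
]

# Placeholder names; replace with the competitors you selected.
competitors = ["Competitor A", "Competitor B", "Competitor C"]


def build_matrix(criteria, competitors):
    """Build an empty review grid: one row per criterion, one column per competitor."""
    blank_cells = [""] * len(competitors)
    rows = [["Criteria"] + list(competitors)]
    rows += [[criterion] + blank_cells for criterion in criteria]
    # Final row reserved for notes taken during each individual review.
    rows.append(["General comments"] + blank_cells)
    return rows


def to_csv(matrix):
    """Serialize the grid as CSV text that any spreadsheet program can open."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(matrix)
    return buffer.getvalue()


matrix = build_matrix(criteria, competitors)
print(to_csv(matrix))
```

Saving the printed text to a .csv file gives every reviewer the same empty grid to fill in, which helps keep relative ratings comparable across sites.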

Consider this…

When selecting a list of competitors, instead of just asking “Who does what we do?”, think about users’ alternatives. Ask, “Who or what is most likely to keep users from using our software or going to our website?” While not normally thought of as “competition”, alternatives for an operations support system could be an Excel spreadsheet or printed paper forms.

Competitive Assessment Rubric

If you don’t have time for a full written competitive analysis, you can evaluate your competition with an assessment rubric. Because it results in a clear ranking, the rubric is a good “at a glance” way of communicating a software’s relative strengths and weaknesses to clients. Some things to note about this evaluation method:

- It is not a scientific analysis; it’s a shortcut for communicating your assessment of the systems reviewed. Like judging a talent competition, subjective ratings (e.g. “eight out of 10”) are inherently imprecise. However, if you use consistent, pre-defined criteria, you should end up with a realistic representation of your comparative rankings.

- When creating the assessment criteria it is important to select attributes that are roughly equivalent in value. In the example below, “Template Layouts” and “Browsing & Navigation” were equally important to the overall effectiveness of the sites reviewed.

The following rubric was created to evaluate mobile phone activation sites, but this approach can be adapted to create ranking metrics for any application.

1. First, create the criteria and rating system by which you will evaluate each system.

| | 1 – Poor | 2 – Average | 3 – Excellent |
| --- | --- | --- | --- |
| Marketing & Commerce Integration | Option to “Buy Now” is not offered in marketing pages or users are sent to a third party site. | The option to “Buy Now” is available from the marketing pages but there are some usability issues with layout and transition. | The marketing and commerce sites are well integrated and provide users with an almost seamless transition. |
| Template Layouts (Commerce) | The basic layout is not consistent from page to page and/or the activity areas within the layout are not clearly grouped by type of user task. | The layout is mostly consistent from page to page and major activity areas are grouped by task type. Some areas with information heavy content or more complex user tasks deviate from the established layout paradigms. | The site shows a high level of continuity both in page to page transitions and task-type groupings. Information heavy content and complex user tasks are well thought out and intuitive relative to the site’s established layout paradigms. |
| Browsing & Navigation | The site lacks a cohesive Information Architecture. Information is not in a clear top-down hierarchy. There are numerous “orphan” or pop-up pages that do not fit within the site structure. Similar content is duplicated in multiple areas or is presented in multiple navigational contexts. | The site has a structured Information Architecture. Secondary and Tertiary navigation items are related to parent elements, but there may be multiple menus unrelated to the broader structure. There may be orphan pages of detail or less relevant information. | The Information Architecture is highly cohesive. Information is structured with a clear understanding of user goals. Everything has a logical place within the architecture; secondary menus are incorporated into the site structure or clearly transitioned. |
| Terminology & Labeling (Commerce) | Terminology and labeling is inconsistent, confusing, or inaccurate. Different terms are used to represent the same concept. Some terms may not adhere to a common understanding of their meanings. | Terminology and labeling is consistent but could be more intuitive. Some unnecessary industry specific terminology or uncommon terms are used. | Terminology and naming is both intuitive and consistent. Only necessary industry specific terminology is used, in context, with help references. |

2. Next, evaluate the competition along with your own system, scoring the results.

| | Marketing & Commerce Integration | Template Layouts | Browsing & Navigation | Terminology & Labeling | Total |
| --- | --- | --- | --- | --- | --- |
| Our System | 2 (Average) | 1 (Poor) | 2 (Average) | 3 (Excellent) | 8 |
| Company A | 3 | 3 | 2 | 3 | 11 |
| Company B | 2 | 2 | 2 | 2 | 8 |
| Company C | 1 | 3 | 1 | 2 | 7 |
| Company D | 2 | 3 | 2 | 3 | 10 |
| Company E | 3 | … | | | |
| Company F | | | | | |
| Company G | | | | | |
| Company H | | | | | |

3. Once your table is complete, you can sort on the totals to see your rough rankings.
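If you keep the scores outside a spreadsheet, the totaling and sorting step is easy to reproduce. The sketch below uses the five completed rows from the example table; the function name `rank_systems` is just an illustrative choice, and ties keep the order in which the systems were entered.

```python
# Scores from the completed rows of the example table, in the order:
# Marketing & Commerce Integration, Template Layouts,
# Browsing & Navigation, Terminology & Labeling.
scores = {
    "Our System": [2, 1, 2, 3],
    "Company A": [3, 3, 2, 3],
    "Company B": [2, 2, 2, 2],
    "Company C": [1, 3, 1, 2],
    "Company D": [2, 3, 2, 3],
}


def rank_systems(scores):
    """Return (name, total) pairs sorted from highest to lowest total."""
    totals = {name: sum(ratings) for name, ratings in scores.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for position, (name, total) in enumerate(rank_systems(scores), start=1):
    print(f"{position}. {name}: {total}")
```

Because every criterion carries the same weight in the sum, this only gives a fair ranking when the criteria are roughly equivalent in value, as noted earlier.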

Soliciting Quantitative Feedback

“A successful person is one who can lay a firm foundation

with the bricks that others throw at him or her.” – David Brinkley

User surveys and feedback forms are arguably the easiest and most inexpensive methods of gathering information about your users and their preferences. Like competitive analysis, surveys are also used in a marketing context. However, UXD surveys contain more targeted questioning about how usable a website or software is, and the relative ease with which people can access content. There are a few different types of user surveys. Some are different questioning methods; others approach the user at a different point in time.

Feedback forms – Feedback forms are the most common way to elicit input from users on websites, but they can be implemented for desktop applications. A feedback form is simply a request for input on an existing application or set of functionality. A feedback form can be a single, passively introduced questionnaire that is globally available on a website for users to fill out. They can also be short one- or two-question mini-forms placed throughout an application in relevant places such as a help/support topic, or when an application unexpectedly terminates.

Tips for Feedback:

- Keep it simple. A shorter, simpler survey will be completed more frequently.

- Always offer a comment field or other means for users to type free-form feedback. Comments should be monitored and categorized regularly.

- If your marketing team has already established a feedback form, try to piggy back off of their efforts. If not, consider collaboration. Much of the information collected in a feedback mechanism will be useful to both groups.

Surveys – A survey is not all that different from a feedback form, except that it is usually offered for a limited amount of time, involves some sort of targeted recruitment effort, and the content of the questions is not necessarily related to an existing site or application. Surveys can be implemented in a few ways, depending on the goals of your research.

- Intercept Surveys – Intercept surveys are commonly used on web sites. An intercept survey is a survey that attempts to engage users at a particular point in a workflow, such as while viewing a certain type of content. Intercept surveys usually take the form of a pop-up window or overlay message. Because these are offered at a specific point in a process (e.g. the jewelry section of an e-commerce department store), the results are highly targeted.

- Online Surveys – Online surveys can be conducted to learn about a website, application, kiosk, or any other type of system. However, the survey itself is conducted online. There are many advantages over traditional phone or in-person surveys, not the least of which is the ease of implementation. There are a number of online survey tools available that will make it easy for you to implement your survey in minutes. Most offer real-time data aggregation and analysis.

- Traditional Surveys – There is definitely still a place for surveys conducted in person or on the phone. While a bit more costly and time consuming these methods can reach users who would not otherwise be reachable online or those who normally will not take the time to fill out a survey on their own.

Why Conduct User Surveys?

- User Surveys are an effective, economical method of gathering quantitative input.

- You can poll a very large number of users when compared to the number of users who participate in user testing or other research methods.

- Since surveys (online versions in particular) are usually anonymous, you are likely to get more honest and forthright responses than from other forms of research.

- Surveys can be implemented quickly, allowing you to test ideas in an iterative process.

When is a Survey Most Useful?

- When you have specific targeted questions that you would like answered by a statistically relevant number of people, such as when polling attitudes.

- When there is a specific problem that you want to investigate.

- When you want to show the difference between internal attitudes and perceptions of an issue versus the attitudes and perceptions of your target market.

- When you want direct feedback on a live application or web site.

- When you want to use ongoing feedback to monitor trends over time, and gauge changes and unexpected consequences.

Development Life-cycle

Surveys are useful in the requirements gathering stage as well as follow-up feedback elicitation during the maintenance stage, i.e. post release.

Limitations of User Surveys

- User surveys are entirely scripted. So while skip logic[1] and other mechanisms can offer a degree of sophistication, there is little interactive or exploratory questioning.

- Users usually provide a limited amount of information in any open ended questions.

- Because there is little room to explain or clarify your questions, surveys should be limited to gathering information about tangible, somewhat simplified concepts.

- In the case of passive feedback forms, most people won’t think to offer positive feedback. In addition, not everyone with a complaint will take the time to let you know.
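To make the skip logic mentioned in the first limitation concrete, here is a minimal sketch of how a scripted survey can branch on an answer. The survey structure, question wording, and function name are hypothetical and are not taken from any particular survey tool; real tools configure this through their own interfaces.

```python
# Hypothetical survey definition. Each question maps an answer to the next
# question to show; "*" means "any answer". An empty mapping ends the survey.
SURVEY = {
    "q1": {
        "text": "Have you used the saved-cart feature?",
        "next": {"yes": "q2", "no": "q3"},
    },
    "q2": {
        "text": "How easy was it to use (1-5)?",
        "next": {"*": "q3"},
    },
    "q3": {
        "text": "Any other comments?",
        "next": {},
    },
}


def questions_shown(survey, answers, start="q1"):
    """Return the ids of the questions a respondent would see, in order.

    Assumes the survey definition contains no cycles."""
    path = []
    current = start
    while current is not None:
        path.append(current)
        routes = survey[current]["next"]
        answer = answers.get(current)
        # Use the route for this answer, falling back to the wildcard route.
        current = routes.get(answer, routes.get("*"))
    return path


print(questions_shown(SURVEY, {"q1": "no"}))
print(questions_shown(SURVEY, {"q1": "yes", "q2": "4"}))
```

Even with branching like this, every path is decided in advance, which is why a survey cannot follow up on an unexpected answer the way an interviewer can.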

How to Conduct a User Survey

- Define your research plan. As in any research effort, the best starting point will be to define and document a plan of action. A documented plan will be useful even if it’s just a bullet list covering a basic outline of what you will be doing. Some considerations for your user survey research plan are:

- What are the goals of your survey? What are you hoping to learn from participants? Are you looking for feedback on current functionality, to explore concepts and preferences, or to troubleshoot an issue?

- Who will you survey? Are you polling your existing users? Do you need to hear from businesses, teens, cell phone users, or some other targeted population?

- How many responses will you target? While even a small number of responses is arguably more valuable than no input at all, you will want to try for a statistically relevant sample size. There are formulas that will tell you how many survey participants are needed for the responses to be representative of the population you are polling. For most common UXD uses, however, 400-600 participants should be sufficient.[2]

- Select a survey tool. There are a number of online survey tools to choose from, so you’ll need to select an application that fits your goals and budget. Even if you will be conducting the surveys in person or on the phone, consider using survey software, as it offers substantial time savings in processing and analyzing the results. Once you have selected the survey software, take some time to use the system and get to know what options are available. A basic understanding of your survey tool can help reduce rework as you define your questions.

- Develop your survey. This is usually the most time consuming part of the process. While seemingly straightforward, it’s quite easy to phrase questions in a way that will bias responses, or that are not entirely clear to participants—resulting in bad or inaccurate data.

- Define your Questions. Consider the type of feedback you would like to get and start outlining your topics. Do you want to learn how difficult a certain process is? Do you need to find out if people have used or are even aware of certain functionality? Once you have a basic outline, decide on how to best ask each of your questions to get useful results. Some possible question types include:

- Scale – Users are asked to rate something by degree (e.g. 1 to 10, or level of agreement). Be sure to include a range of options and a “not applicable” where relevant.

- Multiple Choice – Users are asked a question and given a number of pre-set responses to choose from. Carefully review multiple choice answers for any potential bias.

- Priority – Users are asked to prioritize the factors that are important to them, sometimes relative to other factors. A great exercise to help users indicate priority is the “Divide the Dollar” where users are asked to split up a limited amount of money by dividing it among a set of features, attributes etc.

- Open Ended – Users are asked a question that requires a descriptive response and are allowed to provide an open response, usually via text entry. Open-ended questions should be used sparingly in this type of quantitative study; it’s difficult to get consistent responses and they are difficult to track and analyze.

- Edit & Simplify. Your goal is to write questions that the majority of people will interpret in the same way. Consider both phrasing and vocabulary. Try to write in clear, concise statements, using plain English and common terms. Your survey should be relatively short and the questions should not be too difficult to answer. In addition, be sure to check and double check grammar and spelling. I once had an entire survey question get thrown off due to one missing letter. We had to isolate the data and re-publish the survey.

- Review for Bias. Review the entire survey, including instructions or introductory text. Consider the sequence of your questions; are there any questions that might influence future responses? Take care not to provide too much information about the purpose of the survey; it might sway users’ responses. Keep instructions clear and to the point. As with all research, you need to be ever vigilant in identifying areas of potential bias.

- Implement & Internally Test. Implement the survey using the survey tool you selected. Next, test the survey internally; this can mean you and a few colleagues, or companywide involvement. Testing the survey internally will help ensure your questions are clear, without error, and that you are likely to get the type of feedback you want.

- Recruit Respondents. Your research plan should have outlined any appropriate demographics for your survey. But now that you are ready to make your survey live, how will you reach them? If you have a high traffic web site or large email list, you can use these to recruit participants. If not, you may need to get creative. Consider recruiting participants through social networking sites, community boards or other venues frequented by your target population.

- Prepare the Analysis. One of the nice things about the online survey tools is that the analysis is usually a breeze. You may, however, want to put together an overview of some of the insights and interpretations you gathered from the data, e.g. “Since a relatively small number of our customers tend to use social networking sites for business referrals, we may want to lower the priority of getting Twitter integrated onto our website in favor of other planned functionality.” As with all UXD research, present your findings and publish any results.
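For the "how many responses" question in the research plan step, the text does not say which formula to use. One widely used choice, assumed here, is Cochran's formula for estimating a proportion, with an optional finite population correction. The function name and parameters below are illustrative.

```python
import math
from statistics import NormalDist


def sample_size(margin_of_error, confidence=0.95, proportion=0.5, population=None):
    """Estimate the number of responses needed using Cochran's formula.

    margin_of_error: acceptable error as a fraction (0.05 means +/- 5%).
    proportion: expected answer split; 0.5 is the most conservative choice.
    population: if given, apply the finite population correction.
    """
    # z-score for a two-sided confidence level (about 1.96 for 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


print(sample_size(0.05))                    # large population, +/- 5%
print(sample_size(0.04))                    # large population, +/- 4%
print(sample_size(0.05, population=2000))   # user base of 2,000
```

Under these assumptions, the 400-600 range suggested above corresponds to a margin of error of roughly four to five percent at 95% confidence for a large population.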

Would you like a piece of candy?

Yes (95%) No (5%)

Would you like a piece of caramel?

Yes (70%) No (30%)

The Surgeon General recently came out with a study that shows candy consumption as the most common cause of early tooth loss. Would you like a piece of candy?

Yes (15%) No (85%)

Thinking Ahead

Recruit for future studies. At the very end of your survey, ask users if they’d like to participate in future studies. If they agree, collect their contact details and some additional demographic information. This will help you build up your own database of participants for more targeted future testing. Many users will want to be assured that their information will not be connected to their survey responses, and that you will not resell their data.

Our Users, Ourselves

Data from an internal test can be additionally helpful. Getting feedback from people in your company or department will likely produce biased results. While it’s important to isolate that data before conducting the actual survey, you can use feedback from your internal group to illustrate any differences (or notable similarities) in their responses from those of your users. E.g. 90% of our employees use Twitter, while only 35% of our users do.

Additional Resources

- Sample Size Calculator (www.surveysystem.com/sscalc.htm) – a sample size calculator that will determine the sample size required for a desired confidence interval.

- SurveyMonkey (www.surveymonkey.com) – offers a popular online hosted survey tool that works well for basic surveys.

- SurveyGizmo (www.surveygizmo.com) – is comparable to SurveyMonkey, but offers somewhat less robust reporting at a slightly lower fee.

- Ethnio (www.ethnio.com) – is primarily a recruitment tool, but offers some basic survey functionality. Ethnio can be used to drive users from your website to another third party survey.

How to Develop Basic User Personas

“Before I can walk in another person’s shoes,

I must first remove my own.” – Brian Tracy

This article discusses the basics of developing a set of user personas. User personas are not a research method, but a communication tool. They are a visual, creative way to convey the results of research about the users of your software or website. Personas are fictional characters developed to represent aggregate statistical averages to profile a user group. They do not express all types of users or their concerns, but can give a reasonable representation of who might be using your product and what some of their goals or issues might be. Personas are not fixed, precise definitions of your users; they are more of an empathy-creating tool. They can help you get into the mindset of a user type and communicate that mindset to others.

Once created, personas are a way of humanizing your users and providing a canvas on which you can superimpose ideas on how a given user type might interact with your system. A little bit like criminal profiling (minus the crime and vilification), you can think of it as role playing with the intention of gaining new insights into the other party. User Personas should not be confused with engineering use cases or marketing demographic profiling.

Why develop user personas?

- A key benefit of developing user personas is to provide user-centric objectivity during product design, and reinforce the idea that your and your colleagues’ version of a great user experience is not necessarily the same as your end users’.

- User Personas can dispel common stereotypes about users, e.g., the site needs to be so easy to use the VP’s grandmother can use it.

- Later in the design process, user personas can help you communicate the usefulness of a specific design to stakeholders.

While I used to be somewhat limited in my use of personas, I did create them a few years ago for a top-five business school. The project was in the context of a site redesign that included an overhaul of the site’s information architecture. I conducted a fairly exhaustive audit of the MBA space which included a competitive review, expert review, and stakeholder interviews. At the time, I didn’t create personas for myself; I just used the underlying audit data to restructure the site’s content and define a simplified navigational model. However, later, when preparing to present the new information architecture to the client, I took the time to create a set of user personas based on the original data I used to make my design decisions. They were created out of a need to help illustrate to the client how the new structure better addressed the needs of their prospective students, and to assure them that users’ concerns were being addressed. After realizing how effective personas can be for stakeholder buy-in and alignment, I have since used them much more frequently in a range of UX projects.

When are personas most useful?

- User personas are useful when there are a number of unsubstantiated assumptions floating around about who your users are. E.g. early adopters, soccer moms, the VP’s grandma, etc.

- When there is a tendency for developers and product managers to make design decisions based on their own personal preferences, technical constraints, chasing short term sales, or any other reasoning that does not consider the end user.

- When there is a general lack of clarity about who your end user is, or when the data you have regarding your users does not seem to directly translate into insights about their behavior.

- Whenever you need a communication tool to advocate and encourage empathy for end users.

Development Lifecycle

Persona creation is best done during the requirements gathering stage. However, getting the most out of your user personas means that they should also be referenced during development and testing, as questions and changes arise. They should also be compared to, and updated against, actual user testing and post-release feedback. If properly evolved and maintained, personas can be an effective guidepost throughout the development and product lifecycle.

Limitations of User Personas

- It’s easy for personas to be taken too literally or become a stereotype. Personas should never be considered a definitive archetype of your users; doing so runs the risk of turning your definitions into pseudoscience, akin to phrenology. Direct user testing and feedback insights should take precedence over anything derived from a user persona.

- User personas are not precise; they are limited by the innate prejudices, interpretations, and reference points of the authors of the personas and any subsequent user scenarios.

- User personas are not an appropriate communication tool for everyone; some people prefer a well crafted presentation of the source data over a persona which can be perceived as containing superfluous data.

How to implement user personas

With a little research, a set of basic personas is actually fairly easy to put together. The first step is to pull research from multiple sources to gather data about potential users. Next, synthesize this data to form user groups and select representative data from each user group to form a user data profile. Finally, add supplemental character information to create a believable persona. As a general rule, you should start with trying to define three to five user types, adding additional types as appropriate. I would recommend against defining more than about eight or 10. Beyond this number, you start to exceed people’s ability to reasonably keep track of the information.

Consider This…

If you think you need more than ten personas, or have already been heavily relying on personas to drive development, consider investing in an ethnographic study as a next step. Real profiles and insights derived from the results of an ethnographic study will provide more actuality than fictitious personas.

- Conduct Research. Pull together any reliable data you can find that might describe your users or give insight into their goals, concerns or behaviors. Collect any potentially relevant demographics such as gender, age, race, occupation, and household income. Let’s, for example, take the case of developing personas around prospective MBA students. In addition to client interviews, I was able to pull a large amount of key data from sources on the Internet. Some references I found were:

- Graduate Management Admission Council

- GMAC: 2004 mba.com Registrants Survey

- The Black Collegian: ‘The MBA: An Opportunity For Change’

- The Princeton Review: ‘Study of Women MBAs’

- National Center for Education Statistics (NCES)

- Form Relevant User Groups. To continue with the business school case study, research on MBA students showed that there were a growing number of minority women enrolling in MBA schools. In addition, the client indicated a desire to further attract this type of student. Armed with that initial seed data, I defined a user group of minority women who were considering, had applied to, or attended business school. Further research allowed me to compile a list of statistical information about this group (such as average age, current occupation, family status, etc.) as well as formulate some ideas about their interests, concerns and expectations. When compared to more “traditional” MBA students, these women were a few years older on average, more likely to be married with children, and highly focused on what specific opportunities this type of degree offered after graduation.

- Create and Embellish the Persona. Start by turning the data you have for the user group into a single individual. The “Minority Women” user group was converted into a thirty-six-year-old African American woman named Christine. At this point it’s OK, and actually preferable, to fill in the gaps with plausible details about this person to bring them to life a bit; e.g. “Christine Barnhart is a marketing communications manager who balances her time between work and family…” Since this is in large part a tool to facilitate empathy for the user group, as much realistic information as possible should be incorporated to develop the character. As long as the “filler” is not negated by other data, it should be fine. Even still, after you have finished writing your personas, take a step back and ask yourself: “Is it reasonable to expect this person to exist?” Alter your description if necessary, or move on to the next persona.

- Develop and Test User Scenarios. Now that you have your personas and an idea of what your user types are, you can make some assumptions about what functionality or information they might find useful. You will need to develop some User Scenarios to help test any hypotheses you have. Unlike a use case, which is a description of how system functionality interacts (e.g. contact data is retrieved, error message is returned), user scenarios focus on the user’s perspective. A simplified User Scenario for our “Christine” persona might look like:

- While successful in her career, Christine is not entirely sure she has the background and experience to be admitted to a top business school. In addition, she has a lot of specific questions about the program’s culture. She is concerned that it may be too aggressively competitive for her to get the most out of her business school experience. Christine goes to the program website and immediately looks at the eligibility and admission requirements. She takes her time looking through the information and prints out a few pages. Next, she starts to browse around the site, trying to get a feel for the culture. She finds a section that has video interviews with students, so she watches a few and begins to get a sense of whether this school is a fit for her or not.
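The "Form Relevant User Groups" step, where raw research is condensed into a statistical profile for each group, can be sketched in a few lines. The records, group labels, and field names below are made up purely for illustration and do not come from the business school study; real input would come from your research sources.

```python
from collections import Counter
from statistics import mean

# Made-up records for illustration only.
respondents = [
    {"group": "career changer", "age": 34, "occupation": "marketing", "has_children": True},
    {"group": "career changer", "age": 36, "occupation": "marketing", "has_children": True},
    {"group": "career changer", "age": 38, "occupation": "finance", "has_children": False},
    {"group": "recent graduate", "age": 26, "occupation": "consulting", "has_children": False},
    {"group": "recent graduate", "age": 28, "occupation": "consulting", "has_children": False},
]


def build_profiles(records):
    """Summarize each user group into a small data profile."""
    groups = {}
    for record in records:
        groups.setdefault(record["group"], []).append(record)

    profiles = {}
    for name, members in groups.items():
        occupations = Counter(member["occupation"] for member in members)
        profiles[name] = {
            "count": len(members),
            "average_age": round(mean(member["age"] for member in members), 1),
            "most_common_occupation": occupations.most_common(1)[0][0],
            "share_with_children": round(
                sum(member["has_children"] for member in members) / len(members), 2
            ),
        }
    return profiles


for group, profile in build_profiles(respondents).items():
    print(group, profile)
```

A profile like this is only the skeleton of a persona; the name, backstory, and scenario are still written by hand in the "Create and Embellish" step.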

User Stories – Not to be confused with a user scenario (or use case), a user story is a short two or three sentence statement of a customer requirement. It is a customer-centered way of eliciting and processing system requirements within Agile development methodologies[1].

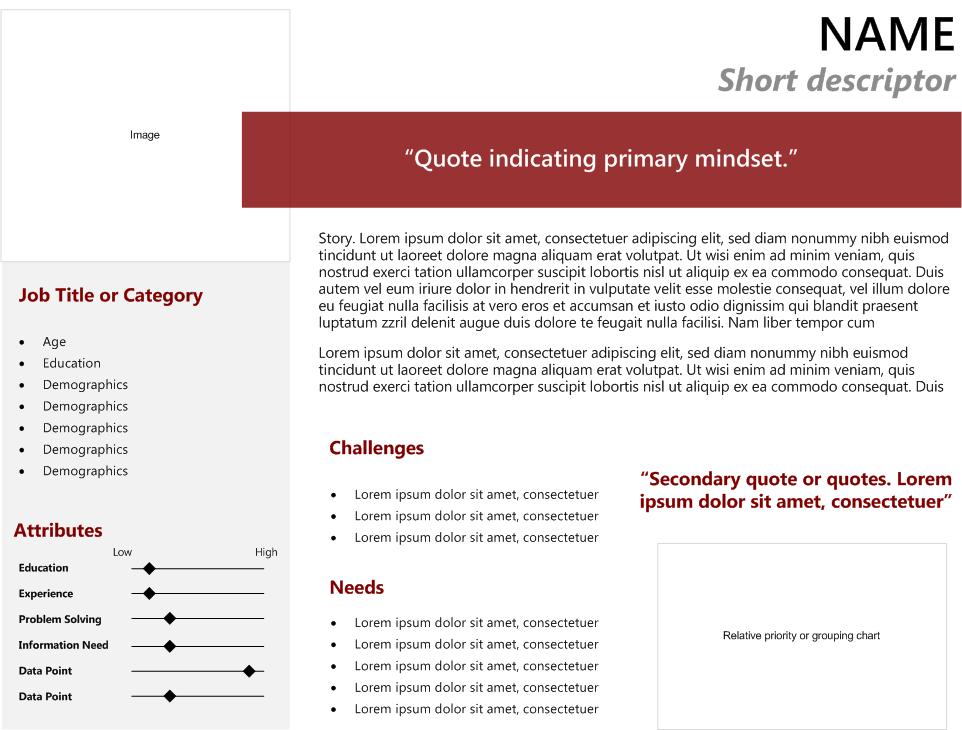

Basic Persona Template

There is a wide range of user persona templates, and they can get rather complicated. Considering that the intention of this type of tool is effective communication, I tend to gravitate toward the clean, simple layouts that are one page or screen. Below is a basic example template. Don’t get too focused on templates, however; if you find information that you think is useful or relevant but it doesn’t fit your template, rework the template to fit the data.

[1] More information on user stories can be found at: http://www.extremeprogramming.org/rules/userstories.html

Card Sorting: A Primer

“Change your language and you change your thoughts.” – Karl Albrecht

Card sorting is a specialized type of user test that is useful for assessing how people group related concepts and what common terminology they use. In its simplest form, a card sort is the process of writing the name of each item you want to test on a card, giving the cards to a user and asking him or her to group like items into piles. There are, however, a number of advanced options and different types of card sorting techniques.

Open Card Sort – An “open” card sort is when you do not provide users with an initial structure. Users are given a stack of index cards, each with the name of an item or piece of content written on it. They are then asked to sort through and group the cards, putting them into piles of like-items on a table. Users are then asked to categorize each of the piles with the name that they think best represents that group/pile. Open card sorts are usually conducted early on in the design process because they tend to generate a large amount of wide ranging information about naming and categorization.

Given the high burden on participants and researchers, I personally find an open card sort to be the least attractive method for most contexts. It is, however, the most unbiased approach. As a general rule, I would reserve this method for testing users with a high degree of expertise in the area being evaluated, or for large scale exploratory studies when other methods have already been exhausted.

Closed Card Sort – The opposite of an open sort, a “closed” card sort is when the user is provided with an initial structure. Users are presented with a set of predefined categories (usually on a table) and given a stack of index cards of items. They are then asked to sort through the cards and place each item into the most appropriate category. A closed sort is best used later in a design process. Strictly speaking, participants in a closed sort are not expected to change, add, or remove categories. However, unless the context of your study prevents it, I would recommend allowing participants to suggest changes and have a mechanism for capturing this information.

Reverse Card Sort – Can also be called a “seeded” card sort. Users find information in an existing structure, such as a full site map laid out on index cards on a table. Users are then asked to review the structure and suggest changes. They are asked to move the cards around and re-name the items as they see fit. A reverse card sort has the highest potential for bias; however, it’s still a relatively effective means of validating (or invalidating) a taxonomic structure. The best structures to use are ones that were defined by an information architect, or someone with a high degree of subject matter expertise.

Modified Delphi Card Sort (Lyn Paul 2003) – This technique is based on the Delphi Research Method[1], which in simple terms is a research method where you ask a respondent to modify information left by a previous respondent. The idea is that over multiple test cycles, information will evolve into a near consensus with only the most contentious items remaining. A Modified Delphi Card Sort is where an initial user is asked to complete a card sort (open, closed, or reverse), and each subsequent user is asked to modify the card sort of their predecessor. This process is repeated until there is minimal fluctuation, indicating a general consensus. One significant benefit of this approach is ease of analysis. The researcher is left with one final site structure and notes about any issue areas.

Online Card Sort – As the name implies, this refers to a card sort conducted online with a card sorting application. An online card sort allows for the possibility of gathering quantitative data from a large number of users. Most card sorting tools facilitate analysis by aggregating data and highlighting trends.

Paper Card Sort – A paper sort is done in person, usually on standard index cards or sticky notes. Unlike an online sort, there is opportunity to interact with participants and gain further insight into why they are categorizing things as they are.
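
The aggregation that online card sorting tools perform can be approximated by counting how often participants place two cards in the same group. The following is a minimal sketch in Python, not the method of any particular tool; the function name `co_occurrence` and the sample cards and categories are hypothetical and used only for illustration.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Return, for each pair of cards, the share of participants who grouped them together.

    `sorts` holds one entry per participant; each entry maps a category
    name to the list of cards that participant placed in it.
    """
    pair_counts = Counter()
    for sort in sorts:
        for cards in sort.values():
            # Sort the cards so a pair is always counted under the same key.
            for pair in combinations(sorted(cards), 2):
                pair_counts[pair] += 1
    return {pair: count / len(sorts) for pair, count in pair_counts.items()}


# Hypothetical results from three participants (illustrative data only).
sample_sorts = [
    {"Outdoor": ["Bikes", "Scooters"], "Games": ["Puzzles", "Board Games"]},
    {"Ride-ons": ["Bikes", "Scooters"], "Indoor": ["Puzzles", "Board Games"]},
    {"Wheels": ["Bikes", "Scooters", "Puzzles"], "Games": ["Board Games"]},
]

agreement = co_occurrence(sample_sorts)
# List the pairs from strongest to weakest agreement.
for pair, share in sorted(agreement.items(), key=lambda item: -item[1]):
    print(pair, round(share, 2))
```

Pairs with a high share are strong candidates to sit together in the final structure, while pairs with a middling share point to the contentious items worth probing in a follow-up paper sort.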

Why Use Card Sorting?

- Card sorting is a relatively quick, low cost, and low tech method of getting input from users.

- Card sorting can be used to test the efficacy of a given taxonomic structure for a Web site or application. While commonly used for website navigation, the method can be applied to understand data structures for standalone applications as well.

- When designing new products or major redesign efforts.

- When creating a filtered or faceted search solution, or evaluating content tags

- For troubleshooting, when other data sources indicate that users might be having a hard time finding content.

When is Card Sorting Most Useful?

Development Life-cycle

Card sorts are useful in the requirements gathering and design stages. Depending on where you are in the design process you may get more or less value from a given method (open, closed, reverse, etc).

Limitations of Card Sorting

- The results of card sorting can be difficult and time consuming to analyze; results are rarely definitive and can reveal more questions than answers.

- The results of a card sort will not provide you with a final architecture; they will only give you insight into possible directions and problem areas.

How to Conduct a Card Sort

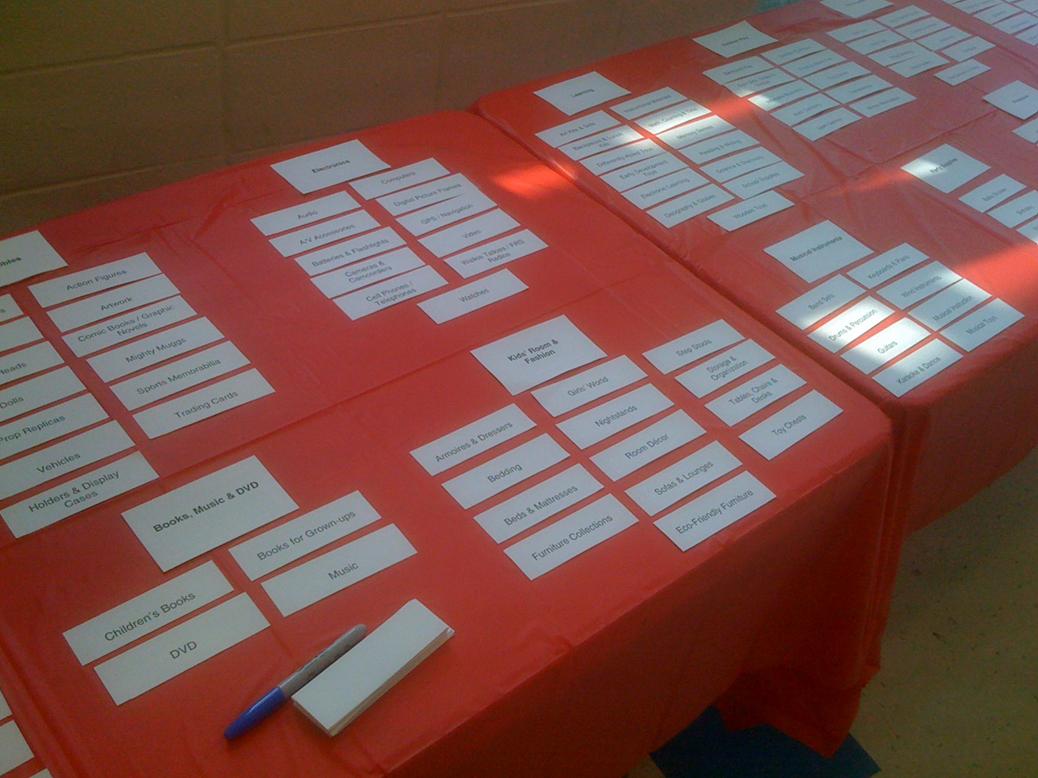

Card sorts are one of those things that are somewhat easier to conduct than to explain. Because there are so many variations, I’ve decided to illustrate the concept with a walkthrough of an actual project case study. I was recently brought into a card sorting study by a colleague of mine[2] who was working on a complex taxonomy evaluation. The project was for a top toy retailer’s e-commerce site. After weeks of evaluating traffic-patterns and other data, my colleague had developed what he hoped would be an improved new site structure. He wanted to use card sorting techniques to validate and refine what he had developed.

- Define your research plan. Our research plan called for some online closed card sorts to gather statistically relevant quantitative data, as well as the rather innovative idea to go to one of the retail locations and recruit shoppers to do card sorting onsite. The in-store tests would follow a reverse sort, using the modified Delphi method. That is, shoppers would be shown the full site structure and asked to make changes. Each shopper would build off of the modifications of the previous shopper until a reasonable consensus was achieved.

- Prepare your materials. In the case of in-store card sorts, we needed to take the newly defined top and second level navigation categories and put each on its own index card. The cards would be laid out on two long banquet tables so participants could see the structure in its entirety. Single page reference sheets of the starting navigation were printed up so we could take notes on each participant and track progressive changes. We had markers and blank index cards for modifications. A video camera would be used to record results, and a participant consent form was prepared.

- Recruit Participants. Unlike lab-based testing where you have to recruit participants in advance, the goal for the in-store testing was to directly approach shoppers. The idea was that not only would they be a highly targeted user group, but that we would be approaching them in a natural environment that closely approximated their mindset when on the e-commerce site, i.e. shopping for toys. Because we would be asking shoppers to take about 10-20 minutes of their time, the client provided us with gift cards which we offered as an incentive/thank you. Recruitment was straightforward; we would approach shoppers, describe what we were doing and ask if they would like to participate. We attempted to screen for users who were familiar with the client’s website or at least had some online shopping experience.

- Conduct the Card Sort. After a shopper agreed to participate and signed the consent form, we explained that the information on the table represented potential naming and structure for content on the e-commerce site. Users were asked to look through the cards and call attention to anything they didn’t understand or things they would change. They could move items, rename them or even take them off the table. Initially we let the participant walk up and down the table looking at the cards. Some would immediately start editing the structure, while others we needed to encourage (while trying not to bias results) by asking them what they had come into the store for and where they might find that item, etc. After an initial pass, we would then point out to the participant some of the changes made by previous participants as well as describe any recurring patterns to elicit further opinions. After about 15 participants, the site structure stabilized and any grey areas were fairly clearly identified.

- Prepare the Analysis. At the end of the study, there was a reference sheet with notes on each participant’s session, video of the full study, and a relatively stable final layout. With this data, it was fairly easy to identify a number of recurring themes, i.e. issues that stood out as confusing, contentious, or as a notable deviation from the original structure. As in any card sort, the results were not directly translatable to a final information structure. Rather, they provided insights that could be combined with other data (such as online sorting results) to create the final taxonomy.
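
One way to make “the structure stabilized” in a modified Delphi sort less subjective is to record the layout left by each participant and count how many cards changed from one session to the next. The sketch below is only an illustration of that idea and not the procedure used in the study; the function names, the `window` and `threshold` parameters, and the sample layouts are all assumptions. It also simplifies by treating a renamed category as a change of category.

```python
def cards_changed(previous, current):
    """Count cards whose category differs between two layouts.

    Each layout maps a card label to the category it sits under. Cards
    that were added or removed also count as changes.
    """
    all_cards = set(previous) | set(current)
    return sum(1 for card in all_cards if previous.get(card) != current.get(card))


def has_stabilized(layouts, window=3, threshold=0):
    """Return True when each of the last `window` sessions changed at most `threshold` cards.

    `window` and `threshold` are illustrative assumptions, not study values.
    """
    if len(layouts) <= window:
        return False
    recent = layouts[-(window + 1):]
    changes = [cards_changed(a, b) for a, b in zip(recent, recent[1:])]
    return all(change <= threshold for change in changes)


# Hypothetical layouts: the starting structure plus the state after each shopper.
start = {"Bikes": "Outdoor", "Puzzles": "Games", "Dolls": "Toys"}
after_p1 = {"Bikes": "Ride-ons", "Puzzles": "Games", "Dolls": "Toys"}
after_p2 = {"Bikes": "Ride-ons", "Puzzles": "Games", "Dolls": "Dolls & Plush"}
after_p3 = dict(after_p2)  # the next three shoppers left the layout untouched
after_p4 = dict(after_p2)
after_p5 = dict(after_p2)
layouts = [start, after_p1, after_p2, after_p3, after_p4, after_p5]

print(has_stabilized(layouts[:4]))  # still changing within the last three sessions
print(has_stabilized(layouts))      # three quiet sessions in a row
```

A count like this complements rather than replaces the session notes and video, since it says nothing about why participants made or declined to make a change.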

Figure 3: Sample Card Sort Display: Cut Index Cards on Table

Additional Resources

- OptimalSort (www.optimalsort.com) – an online card sorting utility.

- WebSort (www.websort.net) – another online card sorting utility.

How to Prototype for User Testing

“If I have a thousand ideas and only one

turns out to be good, I am satisfied.” – Alfred Bernhard Nobel

UXD prototyping is a robust topic and difficult to adequately cover in this type of overview guide. Therefore, I do so with the caveat that I am only providing the tip of the iceberg to get you started, with some references to where you can learn more.

Prototyping is not a research method; it is a research tool. An important thing to understand about UXD prototypes is that the term “prototype” itself can mean something slightly different to the UXD community than to the software development community at large. For example, one of the more common engineering usages of the term refers to an operational prototype, sometimes called a proof of concept. This is a fully or partially functional version of an application. UXD prototypes are, however, not usually operational. Most often they are simulations focused on how a user might interact with a system. In the case of the “paper prototype”, for example, there is no functionality whatsoever, just paper print-outs of software screens.

There are many different names for types of prototypes in software engineering, most of which describe the same (or very similar) concepts. Here are some of the most common terms:

- Operational – Refers to a fully or partially functional prototype that may or may not be further developed into a production system. User testing prototypes can be operational, such as during late stage validation testing, though a program beta is more commonly used at this stage.

- Evolutionary – As the name implies, an evolutionary prototype is developed iteratively with the idea that it will eventually become a production system. User testing prototypes are not “evolutionary” in the strictest sense if they do not become production systems. However, they can be iterative and evolve through different design and testing cycles.

- Exploratory – Refers to a simulation that is intended to clarify requirements, user goals, interaction models, and/or alternate design concepts. An exploratory prototype is usually a “throw-away” prototype, which means it will not be developed into a final system. User testing prototypes would usually be considered exploratory.

Semantics aside, prototypes are probably the single most powerful tool for the researcher to understand user behavior in the context of the product being developed. Some commonly used prototypes in user testing are:

- Wireframes – A wireframe is a static structural description of an interface without graphic design elements. Usually created in black, white and grey, a wireframe outlines where the content and functionality is on a screen. Annotated wireframes are wireframes with additional notes that further describe the screens’ content or interactivity.

- Design Mockups – Similar to wireframes, a design mockup is a static representation of an interface screen. However, design mockups are full color descriptions that include the intended graphical look and feel of the design.

- HTML Mockups – Used in web site design, HTML mockups refer to interface screens that are created in the Hypertext Markup Language and so can be viewed in a browser. Most often, HTML mockups simulate basic functionality such as navigation and workflows. HTML mockups are usually developed with wireframes or a simplified version of the intended look and feel.

- Paper Prototype – A paper prototype is literally a paper print-out of the designed interface screen. A paper prototype could be of wireframes or design mockups. In addition, it could be one page to get feedback on a single screen, or a series of screens intended to represent a user task or workflow.

- PDF Prototype – A PDF prototype consists of a series of designed interface screens converted into the Adobe Acrobat (.pdf) file type. Like HTML mockups, PDF prototypes can simulate basic functionality such as navigation and workflows. However, they take less time to create than HTML mockups and can be created by someone without web development skills.

- Flash Prototype – A prototype developed using Adobe’s Flash technology. Flash prototypes are usually highly interactive, simulating not only buttons and workflows, but the system’s interaction design as well. In addition to being quick to develop, Flash prototypes can be run via the web or as a desktop application, making them very portable.

Why Use Prototyping

- It saves money by allowing you to test and correct design flaws before a system is developed.

- It allows for more freedom to explore risky, envelope-pushing ideas without the cost and complexity of developing them.

- Since prototypes are simulations of actual functionality, they are theoretically bug-free. Test results are less likely to be altered or impeded by implementation issues.

When is a Prototype Most Useful?

- When you are trying to articulate a new design or concept

- When you want to test things in isolation (e.g. graphic design separate from information layout separate from interaction design)

- To gather user feedback when requirements are still in a state of flux and/or can’t be resolved

- To evaluate multiple approaches to the same user task or goal to see which users prefer

Limitations of Prototyping

- A prototype will never be as accurate as testing on a live system; there is always some deviation between the real world and the simulation.

- Depending on where you are in the iterative research process, there is a point of diminishing returns where the amount of effort to create the prototype is better put into building a beta.

Creating a UXD Prototype

Because the actual prototype creation process is highly specific to what you are creating and what tools you are using (paper napkins, layout tool, whiteboard, etc) I’ve included a few considerations for defining a prototype instead of detailing the mechanics of creating one.

- Consider your Testing Goals. – Are you looking to understand how users perform a specific set of tasks? Do you need to watch users interact as naturally as possible with the system? Or are you trying to get users’ responses to various experimental ideas and concepts? The answers to these questions will help you make some key decisions about the structure of your prototype.

- Decide on Degree of Fidelity. – What level of fidelity will the prototype achieve? Here, the term fidelity refers to the degree to which a prototype accurately reproduces the intended final system. A low fidelity prototype might be a PowerPoint deck of wireframes. A high fidelity prototype could be an interactive simulation with active buttons and representative content. A good rule of thumb is to develop the lowest fidelity prototype possible to achieve the goals of your study. This limits commitment to the ideas presented and allows more time and money for the recommended iterative process. If a significant amount of time is taken to create an initial prototype with all the bells and whistles, designers are less willing to see when the concept is not working and less likely to change their designs. In addition, the time that goes into building one high fidelity prototype is better spent building multiple lower fidelity versions that allow for more testing in between each revision.

- Scripted Tasks or Natural Exploration? – Another consideration when defining your testing prototype is what content and functionality should be included. Will a preset walkthrough of key screens be sufficient, or do the goals of your study require that users are able to find their own way around the system? On average, I tend to think that enough insight can be gleaned from a series of walkthrough tests and other research methods to warrant using these, leaving the open-ended user-directed tests to be conducted on a product beta or via A/B testing[1] on a live system. With a sufficiently complex system you can quickly hit a point of diminishing returns regarding the amount of effort it takes to simulate functionality vs. actually building it.